An Introduction to Genetic Algorithms

Introduction

Genetic algorithms have been applied in a vast number of ways. This discussion is limited to the optimization of a numerical function. Following the convention of computer programs, the problem will be considered to be a minimization. (If you want to maximize, then minimizing the negative of your function is the same thing.)

We start with a bit of terminology. The function that is being minimized is called the objective. The inputs to the function that are allowed to vary in order to arrive at the optimum are called the parameters. (There may be other inputs to the function which are fixed for a given optimization problem.)

The “traditional” approach to optimization in this setting uses a derivative-based approach. Examples are Newton-Raphson and conjugate gradient techniques. The scheme of these algorithms is:

- Hold one solution at a time

- Look locally to see what direction to move in (based on the gradient of the function at the current solution)

- Select the new current solution after deciding how far to move along that path

These algorithms work remarkably well for a great majority of practical problems. We’ll revisit this property later.

When are Genetic Algorithms Useful?

There are at least three situations where genetic algorithms are useful:

- The objective function is not smooth (i.e., not differentiable).

- There are multiple local optima.

- There is a large number of parameters (the meaning of “large” keeps changing).

If the objective function does not have a derivative, then clearly a derivative-based algorithm can not be used. There are several choices of algorithm for a problem like this — genetic algorithms are one. One way to have an objective that is not differentiable is if one or more of the parameters is integer-valued.

Derivative-based algorithms take no allowance of multiple optima — they go to a local optimum near to where they start. If there are likely to be only a few local optima, then using several random starts may be enough to solve the problem.

Derivative-based algorithms often progress slowly when there is a large number of parameters. If the gradient is being evaluated numerically, then each iteration of the optimization requires as many function evaluations as there are parameters. The space to be searched is vast, so a large number of iterations will probably be required. In general, derivative-based algorithms work better as they approach the optimum. So a reasonable strategy for many problems with a lot of parameters is to start with a genetic algorithm, then use the best solution from the genetic algorithm as the start for a derivative-based optimizer.

Random Optimization Algorithms

Genetic algorithms are random algorithms — the course taken by the algorithm is determined by random numbers. This means that if you ask a random algorithm to optimize the same problem twice in exactly the same way, you will get two different answers (usually, unless the exact optimum is found). Sometimes you’d really like to get the same answer to the same question. If so, this advises against random algorithms — though it would be possible to always use the same seed for the random number generator.

Before we describe genetic algorithms, there are two other random algorithms that are worth mentioning — we will meet both of them again later.

The Totally Random Algorithm

The totally random algorithm just generates random parameter vectors, evaluates each one, and saves the best one that it finds. Though it lacks efficiency, it is very easy to program and can be very useful. Sometimes it is good enough — why buy a Ferrari when a bicycle will do? Note that we have already seen a form of this algorithm: use a derivative-based optimizer from several random starts.

Simulated Annealing

In this algorithm one solution is held at a time, and random steps away from this solution are taken. If the random step results in a better solution, then that becomes the new solution about which random steps are taken. As the optimization proceeds, the average size of the steps gradually decreases.

The image for this algorithm is of a substance as it cools. When it is hot, jumps are very energetic — perhaps jumping out of a local minimum. Eventually the substance cools so much that it is frozen in place. Note that we’ve described a simplified version of simulated annealing, but this suits our needs better than the more complex version.

Anatomy of a Genetic Algorithm

In contrast to the other algorithms we have discussed, genetic algorithms contain a population of solutions at any one time.

A genetic algorithm requires three processes:

- A way to select parents

- A mating ritual between the parents (the genetics)

- A survival of the fittest mechanism

The typical algorithm selects two “parents” from the population, and commingles their “genes” to get a new solution. This new solution may or may not enter the population depending on how good its objective is. This is essentially all that genetic algorithms are, even though some presentations try to depict them as complex and mystical. On the other hand, complex mysticism may come into play to get an algorithm to perform well in a particular setting. Probably the single most important aspect is to get the genetics right for the problem at hand.

Performance Not Guaranteed

Genetic algorithms can perform horribly. In particular, the “standard” genetic algorithm is a poor choice. Three aspects of the standard algorithm can be problematic:

- Binary coding of the genetics

- Generational populations

- Inappropriate correlation across parameters in the mating process

The standard algorithm codes the genes as binary strings similar to how DNA codes information in living organisms. This is fine if the parameters really are binary. However, if the parameters are real numbers, then the natural distances between points are destroyed in the transformation.

The standard algorithm has a generational approach to selecting the surviving solutions, again modeled closely on living organisms. The genetics of living organisms, though, are used to establish healthy populations. They are not meant to produce a single superior individual as we desire in optimization. The standard algorithm does not guarantee that the best solution will always survive in the population — this is almost always a bad idea.

The genetic process of the standard algorithm uses a “crossover” as do chromosomes in living organisms. For two parameters that are close to each other in the coding, a child is likely to get both from one of its parents. While for parameters that are far apart, the child is likely to get one from each parent. If the parameters have been transformed to binary, then some pairs of bits should usually inherit from the same parent — but the algorithm will not know which ones those are. If no transformation has been made, then each location should inherit independently of all others. In summary, the standard algorithm does not search the parameter space as efficiently as it might.

All three of these problems are the result of following the natural process too closely. We can be inspired by nature without slavishly imitating it.

S Poetry (pages 329 – 330) compares a form of the standard algorithm with an improved genetic algorithm. (The problem has only binary parameters, so that is not an issue.) The standard algorithm needed an order of magnitude more function evaluations to get similar results (thousands instead of hundreds). The test problem is extraordinarily small — the binary string is only 20 long. Problems of practical size are likely to exhibit even greater differences.

Putting the Pieces Together

Generally the initial population is just a collection of random parameters, though putting in some specific solutions can be very useful. Rather than jumping straight into the genetic algorithm, it is often better to use the totally random algorithm in a start-up phase. If a new solution has a better objective than the worst solution in the population, then the new solution replaces the worst solution. Too much totally-random wastes time because it is inefficient at searching. Too little totally-random wastes time because the genetic algorithm spends too long weeding out the really bad genes.

We have remarked that derivative-based algorithms generally work quite well. In some sense simulated annealing is the random equivalent of a derivative-based algorithm. Simulated annealing is good at searching the space near the current solution. Genetic algorithms are good at finding the better locations on a global scale. Thus we can expect an algorithm that combines genetics and simulated annealing to do better than either alone. The example in S Poetry hints that this is the case.

The BurStMisc R package contains the genopt function that is discussed in S Poetry — an algorithm that incorporates totally random and simulated annealing as just discussed. This function is more in line with the evolutionary algorithm literature and memetic algorithms than traditional genetic algorithms.

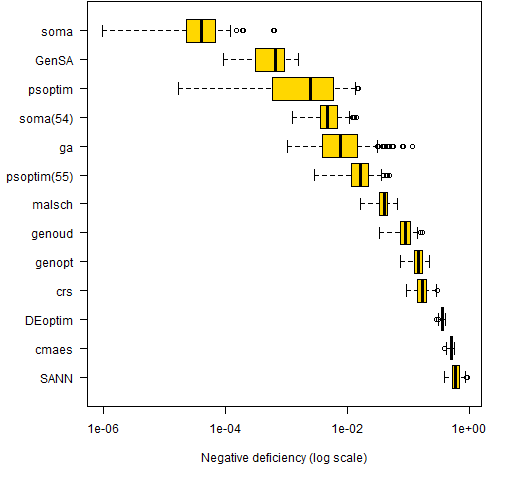

genopt was among the optimizers that competed in “A comparison of some heuristic optimization methods”.

A good treatment of genetic algorithms is:

David B. Fogel (2006). Evolutionary Computation: Toward a new philosophy of machine intelligence. Wiley Interscience.

You will not find an exact algorithm for what you want to do in Fogel’s book. What it does have is ways to think about creating an algorithm, and (perhaps more importantly) ways not to think.